9 Best Python Libraries for Natural Language Processing in 2024

Natural language processing (NLP) sits at the crossroads of data science and artificial intelligence (AI). Its fundamental aim is to enable machines to comprehend human languages and derive meaning from text.

Organizations' growing interest in NLP stems from its potential to unlock insights and solve the language-based problems that consumers encounter with products.

Given the complexity of NLP, developers need the best available tools to apply NLP techniques and algorithms effectively and to build services that can process natural language.

Natural language processing (NLP) is a branch of computer science and AI that enables computers to interpret, understand, and generate human language in written and spoken forms. It combines computational linguistics, the rule-based modeling of human language, with algorithms from statistics, machine learning, and deep learning.

NLP aims to allow computers to comprehend the full nuances of human language, including the intent and emotions conveyed by the speaker or writer. Applications of NLP include translating languages, executing voice commands, summarizing texts, and more, making it a cornerstone technology in both consumer applications like digital assistants and professional solutions aimed at improving business efficiency and productivity.

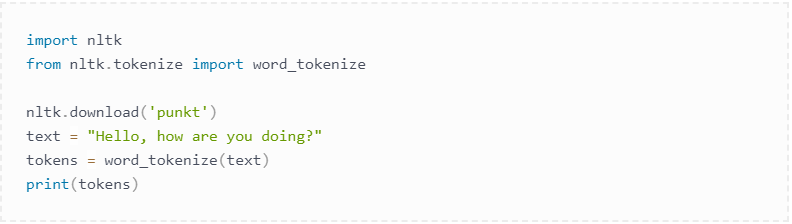

The Natural Language Toolkit (NLTK) is a comprehensive Python library for a wide range of NLP and machine learning tasks, including classification, stemming, tagging, parsing, semantic reasoning, and tokenization. It is also a key educational resource that gives Python developers, particularly those new to NLP and machine learning, foundational knowledge and tools.

Originating from the collaborative efforts of Steven Bird and Edward Loper at the University of Pennsylvania, the NLTK has been instrumental in pioneering NLP research and is now incorporated into academic syllabi worldwide, reflecting its significance and utility in the field.

Despite its broad applicability and versatility, NLTK has a reputation for being hard to use in practice: it has a steep learning curve and is slow for production workloads. Nevertheless, it offers valuable resources, such as the NLTK book, to help developers understand and navigate the language processing tasks the toolkit supports.

Use-case: Tokenization of text.
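As a dependency-free illustration of what tokenization does, the sketch below splits a sentence into word and punctuation tokens with a regular expression. It uses only Python's standard library. The pattern matches the one behind NLTK's `wordpunct_tokenize`, but the helper name `simple_tokenize` is hypothetical and this is not a substitute for NLTK's full tokenizers.

```python
import re

# A word is a run of letters, digits, or underscores; punctuation is any run
# of characters that are neither word characters nor whitespace.
TOKEN_PATTERN = re.compile(r"\w+|[^\w\s]+")


def simple_tokenize(text):
    """Split text into word and punctuation tokens (hypothetical helper)."""
    return TOKEN_PATTERN.findall(text)


tokens = simple_tokenize("NLTK makes tokenization easy, doesn't it?")
print(tokens)
# ['NLTK', 'makes', 'tokenization', 'easy', ',', 'doesn', "'", 't', 'it', '?']
```

Note how the contraction "doesn't" is split into three tokens. With NLTK installed, `nltk.tokenize.word_tokenize` handles such cases more carefully, at the cost of downloading extra tokenizer data first.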

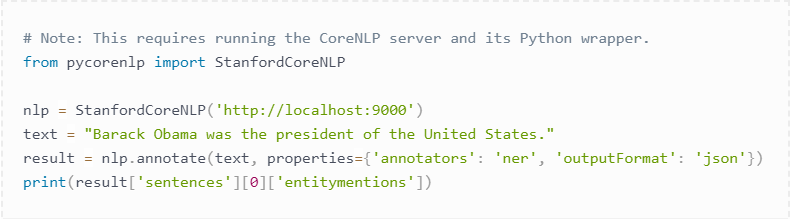

CoreNLP is a Java-based library developed at Stanford University, notable for its precise natural language parsing and comprehensive linguistic annotations. Although it is written in Java, it can be called from Python. It offers high-speed performance, making it particularly effective in product development contexts.

The library is acclaimed for its robustness and versatility in executing tasks such as named entity recognition and coreference resolution. Furthermore, CoreNLP can be integrated with the Natural Language Toolkit (NLTK) to augment its functionality, thereby enhancing NLTK’s overall efficiency in processing Natural Language tasks.

Use-case: Named Entity Recognition (NER).

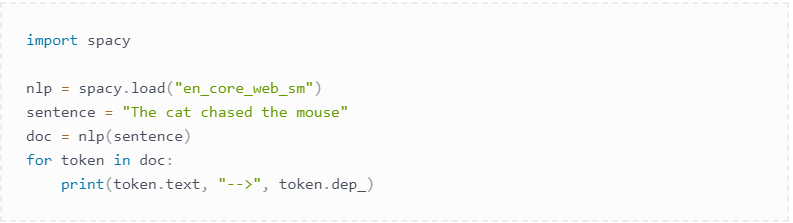

spaCy is a modern NLP library for Python engineered specifically for production use. It is more approachable than many other Python NLP libraries, such as NLTK, and is known for a very fast syntactic parser, which adds to its appeal for efficient processing.

Constructed using Cython, spaCy is noted for its exceptional speed and efficiency, marking it as a standout choice for performance-oriented tasks.

One long-standing criticism of spaCy, often raised in comparisons with other libraries, was its limited language support: early releases accommodated only seven languages. As machine learning, NLP, and spaCy itself have grown in popularity, that coverage has expanded. Current releases list support for dozens of languages, although trained pipelines exist for only a subset of them.

Use-case: Dependency parsing of a sentence.

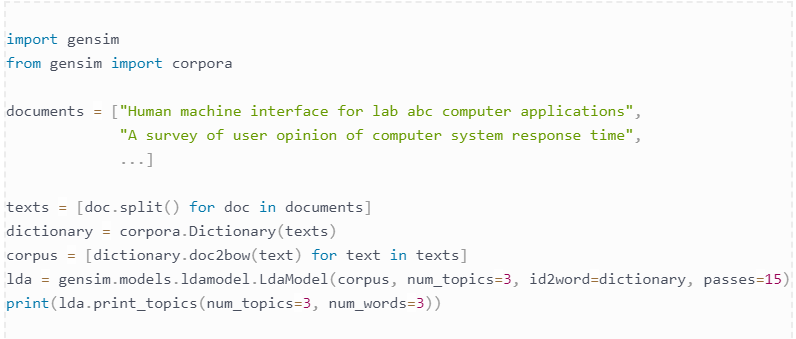

Gensim is a library designed for identifying semantic similarities between two pieces of text using vector space modeling and topic modeling techniques. Unlike libraries that focus solely on batch, in-memory processing, Gensim handles large volumes of text through efficient data streaming and incremental algorithms.

What stands out about Gensim is its minimal memory usage, optimized performance, and swift processing capabilities, largely thanks to integration with the NumPy library. Additionally, its vector space modeling functions are particularly impressive.
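To make the vector space idea concrete, here is a minimal standard-library sketch, not Gensim's API. Each document becomes a sparse bag-of-words vector, and similarity is the cosine of the angle between two vectors. Documents are pulled one at a time from a generator to mirror the streaming style described above. All function names are hypothetical.

```python
import math
from collections import Counter


def stream_documents(lines):
    """Yield one tokenized document at a time instead of loading them all."""
    for line in lines:
        yield line.lower().split()


def to_vector(tokens):
    """Sparse bag-of-words vector: token -> count."""
    return Counter(tokens)


def cosine_similarity(vec_a, vec_b):
    """Cosine of the angle between two sparse vectors (0.0 if either is empty)."""
    dot = sum(count * vec_b[token] for token, count in vec_a.items())
    norm_a = math.sqrt(sum(c * c for c in vec_a.values()))
    norm_b = math.sqrt(sum(c * c for c in vec_b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


raw_lines = [
    "the cat sat on the mat",
    "the cat lay on the rug",
    "stock markets fell sharply today",
]

query = to_vector("the cat sat on the mat".split())
# Each document is vectorized and scored as it arrives from the stream.
scores = [cosine_similarity(query, to_vector(doc)) for doc in stream_documents(raw_lines)]
print([round(score, 3) for score in scores])
```

The identical sentence scores highest, the near-paraphrase scores lower, and the unrelated sentence scores zero because it shares no words with the query. Raw word counts only capture shared vocabulary; topic models such as LDA aim to capture similarity between texts that use different words.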

Use-case: Topic modeling with LDA (Latent Dirichlet Allocation).

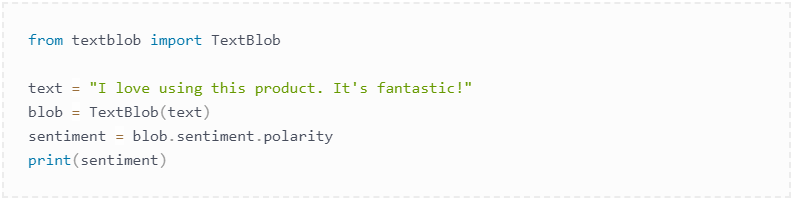

TextBlob is a Python library designed for developers starting to explore natural language processing (NLP). It simplifies fundamental NLP tasks, including sentiment analysis, part-of-speech tagging, and noun phrase extraction, through an accessible interface built on top of the Natural Language Toolkit (NLTK).

While it inherits NLTK's slower processing, TextBlob adds features such as spelling correction and translation, letting developers carry out NLP tasks without intricate procedural knowledge. Note that the translation feature has been deprecated and removed in recent releases.

Use-case: Sentiment analysis of a sentence.
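The sketch below shows the general idea behind lexicon-based sentiment scoring, the family of approaches TextBlob's default analyzer belongs to. It is a toy illustration in plain Python, not TextBlob's implementation: the `POLARITY` lexicon and the `polarity` function are hypothetical and deliberately tiny.

```python
# Hypothetical toy lexicon: word -> polarity in [-1.0, 1.0].
POLARITY = {
    "great": 0.8,
    "love": 0.5,
    "good": 0.7,
    "bad": -0.7,
    "terrible": -1.0,
    "boring": -0.6,
}


def polarity(sentence):
    """Average polarity of the known words; 0.0 when none are known."""
    words = [word.strip(".,!?").lower() for word in sentence.split()]
    scores = [POLARITY[word] for word in words if word in POLARITY]
    return sum(scores) / len(scores) if scores else 0.0


print(polarity("I love this great library!"))      # positive score
print(polarity("The documentation is terrible."))  # negative score
print(polarity("It parses text."))                 # 0.0, no known words
```

With TextBlob installed, the comparable call is `TextBlob(sentence).sentiment.polarity`, which likewise returns a value between -1.0 and 1.0.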

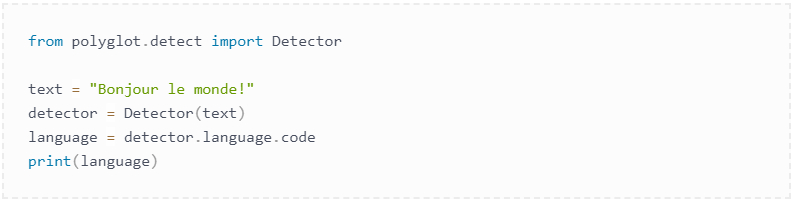

Polyglot is distinguished by its extensive analytical capabilities and support for a wide array of languages, with fast performance attributed to its use of NumPy. Functionally similar to spaCy, Polyglot is efficient and simple, which makes it a good fit for projects that need language coverage beyond what spaCy offers. Notably, Polyglot can also be run as a dedicated command from the command line through its pipeline mechanisms, a feature that sets it apart from other libraries.

As a multilingual NLP library, Polyglot extends its utility by offering word embeddings for over 130 languages and accommodating a variety of tasks, including named entity recognition and morphological analysis, in multiple languages. This makes Polyglot a versatile and indispensable tool for multilingual project implementations.

Use-case: Language detection.
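As a rough illustration of how language detection can work, the sketch below guesses a language by counting how many of its common function words appear in the text. This is a simplified stand-in written with the standard library only, not Polyglot's detector. The `STOPWORDS` table and `detect_language` function are hypothetical and cover just three languages.

```python
# Hypothetical, deliberately tiny stopword lists; real detectors rely on far
# richer statistics (for example character n-gram profiles).
STOPWORDS = {
    "en": {"the", "and", "is", "of", "to", "in"},
    "fr": {"le", "la", "et", "est", "de", "les"},
    "es": {"el", "la", "y", "es", "de", "los"},
}


def detect_language(text):
    """Return the code whose stopwords overlap the text most, or None."""
    words = set(text.lower().split())
    overlaps = {lang: len(words & stops) for lang, stops in STOPWORDS.items()}
    best = max(overlaps, key=overlaps.get)
    return best if overlaps[best] > 0 else None


print(detect_language("the cat is in the garden"))    # en
print(detect_language("le chat est dans le jardin"))  # fr
print(detect_language("el gato es de los vecinos"))   # es
```

Short or mixed-language inputs are where such a simple heuristic breaks down, which is one reason to prefer a dedicated library for real multilingual projects.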

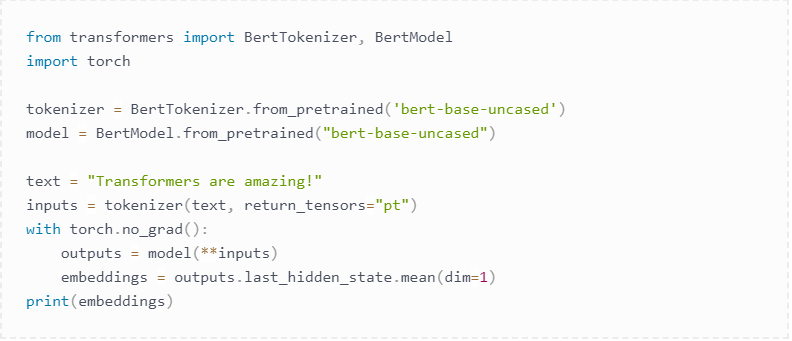

Hugging Face Transformers is a prominent library in natural language processing (NLP) that emerged with the advent of transformer models. Hugging Face, the company behind it, was founded in 2016 by Julien Chaumond, Clément Delangue, and Thomas Wolf and is both an AI community and a machine-learning platform.

Its primary aim is to give data scientists, AI professionals, and engineers easy access to a comprehensive library of over 20,000 pre-trained models. These models are available through the Hugging Face Hub and cater to a wide range of applications.

Additionally, Hugging Face offers access to nearly 2,000 datasets and user-friendly APIs, supported by approximately 31 libraries. This enables developers to use these models with various deep learning frameworks, including PyTorch, TensorFlow, JAX, ONNX, fastai, and Stable-Baselines3.

Use-case: Using a pre-trained BERT model for sentence embedding.

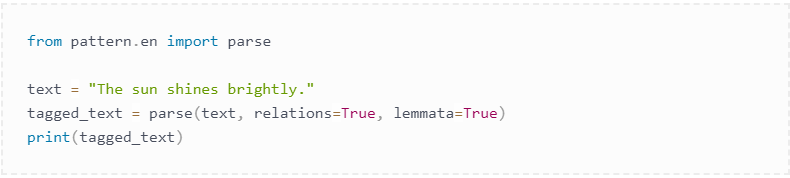

Pattern is a Python library for natural language processing that provides part-of-speech tagging, sentiment analysis, vector space modeling, support vector machines (SVM), clustering, n-gram search, and WordNet integration. It also includes tools such as a DOM parser and a web crawler, as well as access to APIs for social networks such as Twitter and Facebook. Because it is designed primarily for web mining, Pattern may not cover every natural language processing requirement.

Use-case: Part-of-speech tagging.

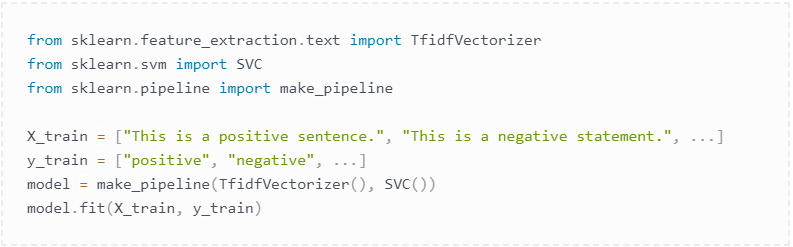

Scikit-learn is a versatile machine-learning library that equips developers with a variety of algorithms for building models, and it is widely used for NLP. Thanks to its user-friendly class methods, it provides numerous functions for applying the bag-of-words technique to text classification problems.
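For readers unfamiliar with bag-of-words, the following standard-library sketch shows what the representation looks like and how TF-IDF reweights it. It is not scikit-learn code, and it uses the plain textbook formula `count * log(N / df)`, whereas scikit-learn's `TfidfVectorizer` applies a smoothed and normalized variant by default. The `tf_idf` helper is hypothetical.

```python
import math
from collections import Counter

documents = [
    "the movie was great",
    "the movie was terrible",
    "the acting was great and the script was great",
]

# Bag of words: each document becomes a word -> count mapping; word order is discarded.
bags = [Counter(doc.split()) for doc in documents]

# Document frequency: in how many documents does each word appear?
doc_freq = Counter(word for bag in bags for word in bag)


def tf_idf(bag):
    """Weight raw counts by log(N / df); words found in every document score 0."""
    n_docs = len(bags)
    return {word: count * math.log(n_docs / doc_freq[word]) for word, count in bag.items()}


weights = tf_idf(bags[2])
print(bags[2]["great"])             # raw count of "great" in the third document
print(round(weights["great"], 3))   # "great" keeps weight: it is not in every document
print(weights["the"])               # 0.0, because "the" appears in all three documents
```

In scikit-learn, `CountVectorizer` and `TfidfVectorizer` from `sklearn.feature_extraction.text` build these representations as sparse matrices that can be passed directly to a classifier.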

However, it’s worth noting that scikit-learn does not incorporate neural networks in its text preprocessing capabilities. For those needing advanced preprocessing tasks such as POS tagging on text datasets, turning to alternative NLP libraries before utilizing scikit-learn for model development is advisable.

Supported by a robust community and comprehensive documentation, scikit-learn continues to be highly regarded among developers.

Use-case: Text classification using TF-IDF and Support Vector Machine.

Natural language processing with Python is about helping computers understand human language. From beginner-friendly tools like NLTK to advanced options like Hugging Face Transformers, a wide range of resources is available for various NLP tasks.

The key to success in NLP projects is selecting the appropriate tool for the job, as each has specific strengths. Understanding these tools is crucial, whether you are just starting out or looking to enhance your NLP capabilities.

If you’re looking for assistance with NLP, TECHVIFY is ready to help. Our team specializes in providing NLP solutions tailored to your needs. Contact TECHVIFY for support with your NLP projects.

Q. Why is Python useful in natural language processing?

Creating NLP-based expert system prototypes with Python is straightforward and effective.

Q. What is a limitation of using NLP?

A significant challenge in applying NLP to multilingual applications is the scarcity of data for numerous languages.
